
AI Agent Architecture: Powerful Insights into Sensors, Reasoning, and Decision Layers

This post is part of our ongoing AI Agent Series on DailyTechRadar. If you missed the earlier posts, start here with the Series Overview.

Previous post: Types of AI Agents Explained

Next in the series: [Coming Soon → Building Learning Agents and Their Use Cases]

Introduction: Why Understanding AI Agent Architecture Matters

Many people understand artificial intelligence at a surface level, but fewer appreciate the engineering that makes it work. Every intelligent decision, self-driven action, and adaptive behavior rests on a deliberate underlying structure: the AI agent architecture.

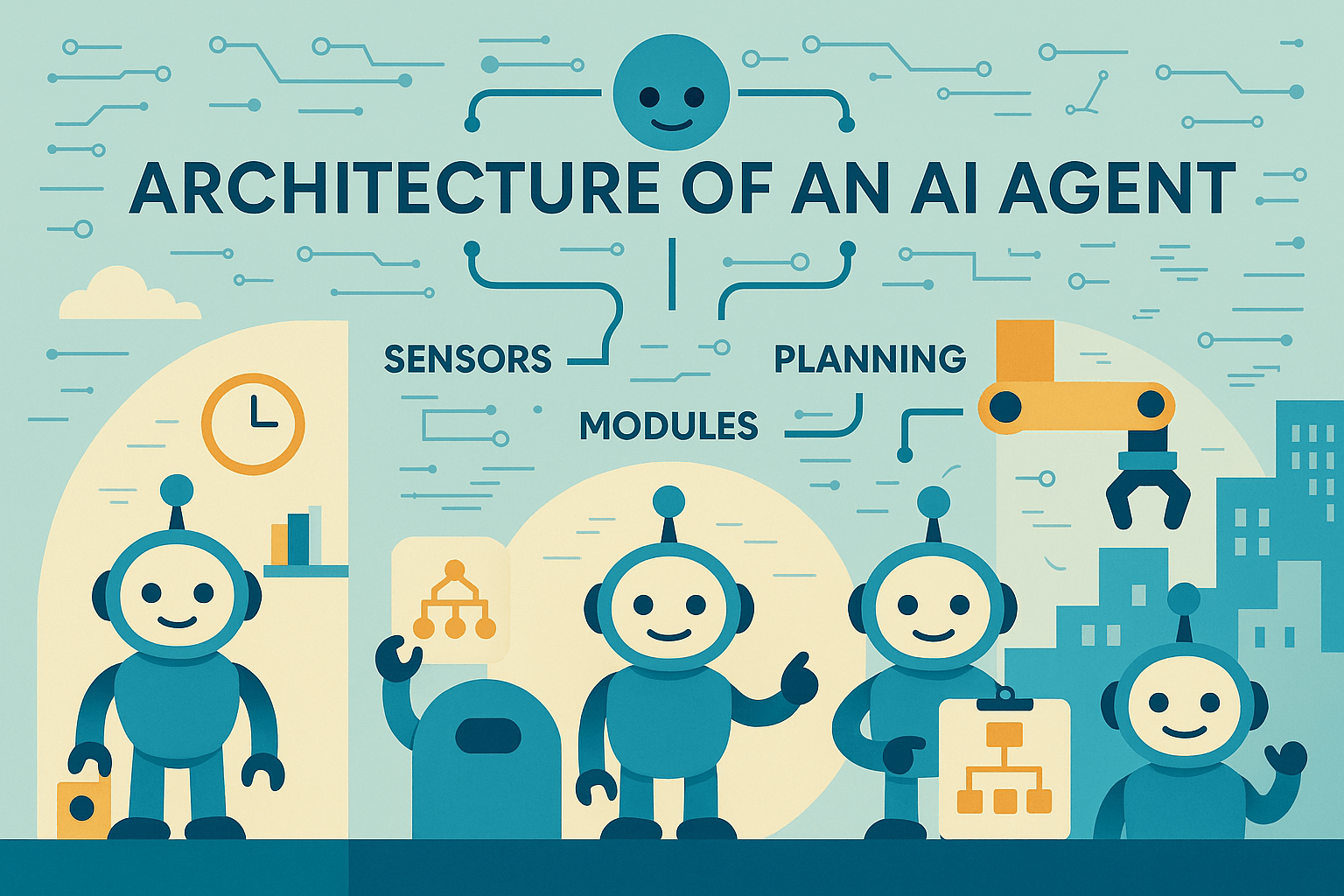

In this post, we break down how AI agents are architected, from sensor input and decision-making modules to planning and execution layers, all driven by a continuous interaction loop between the agent and its environment.

If you want to go beyond the buzzwords and see what makes an AI agent truly “intelligent,” you’re in the right place.

AI Agents, Recapped Briefly

If you’re just joining us, an AI agent is an autonomous system that senses its environment, makes decisions, and performs actions to achieve specific goals. These agents differ in complexity—from simple reactive bots to advanced learning systems.

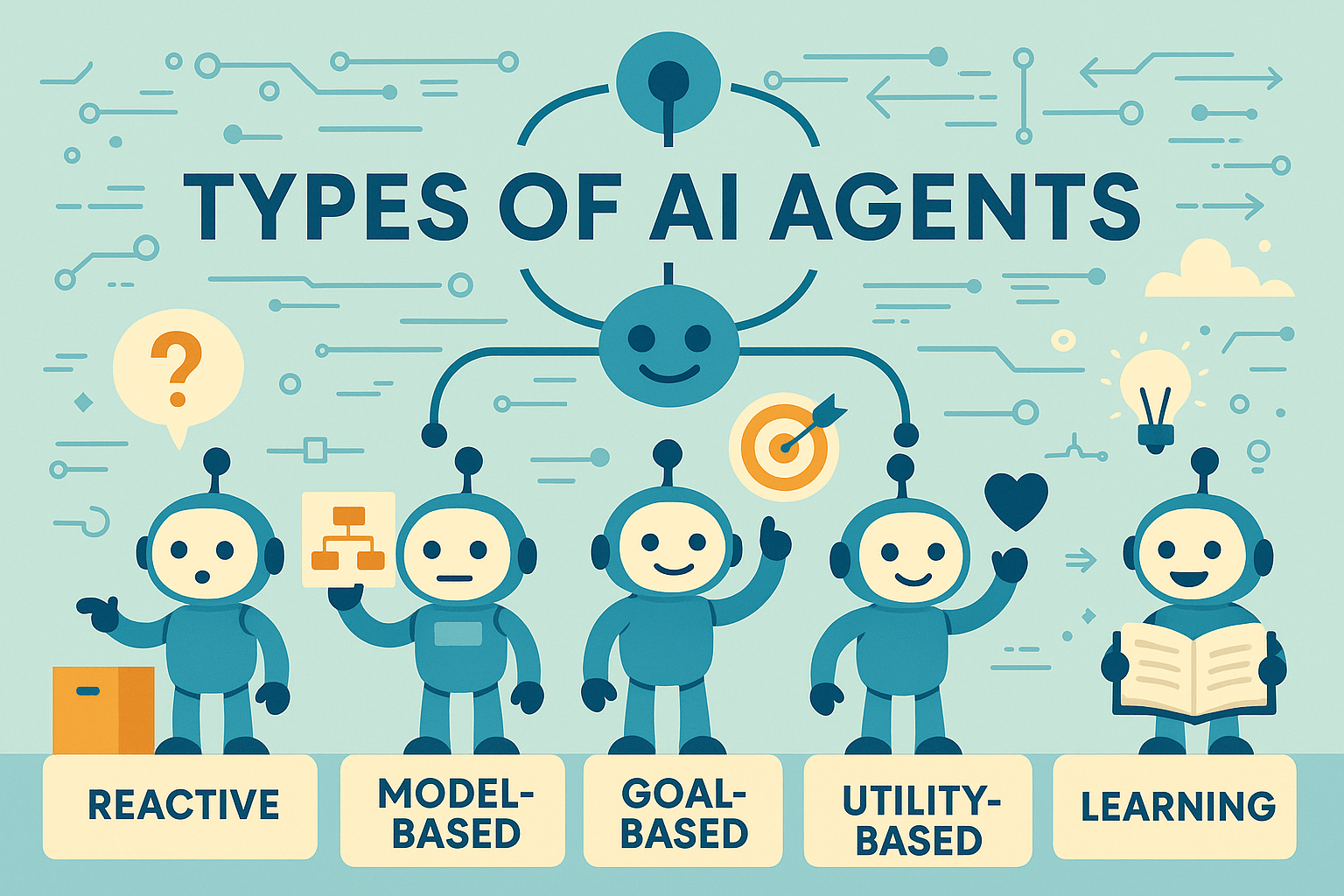

In our last post on the Types of AI Agents, we explored various categories like reactive, goal-based, utility-based, and learning agents. In this post, we’ll focus on what’s under the hood: how an agent is structured internally so that it can function.

What Is AI Agent Architecture?

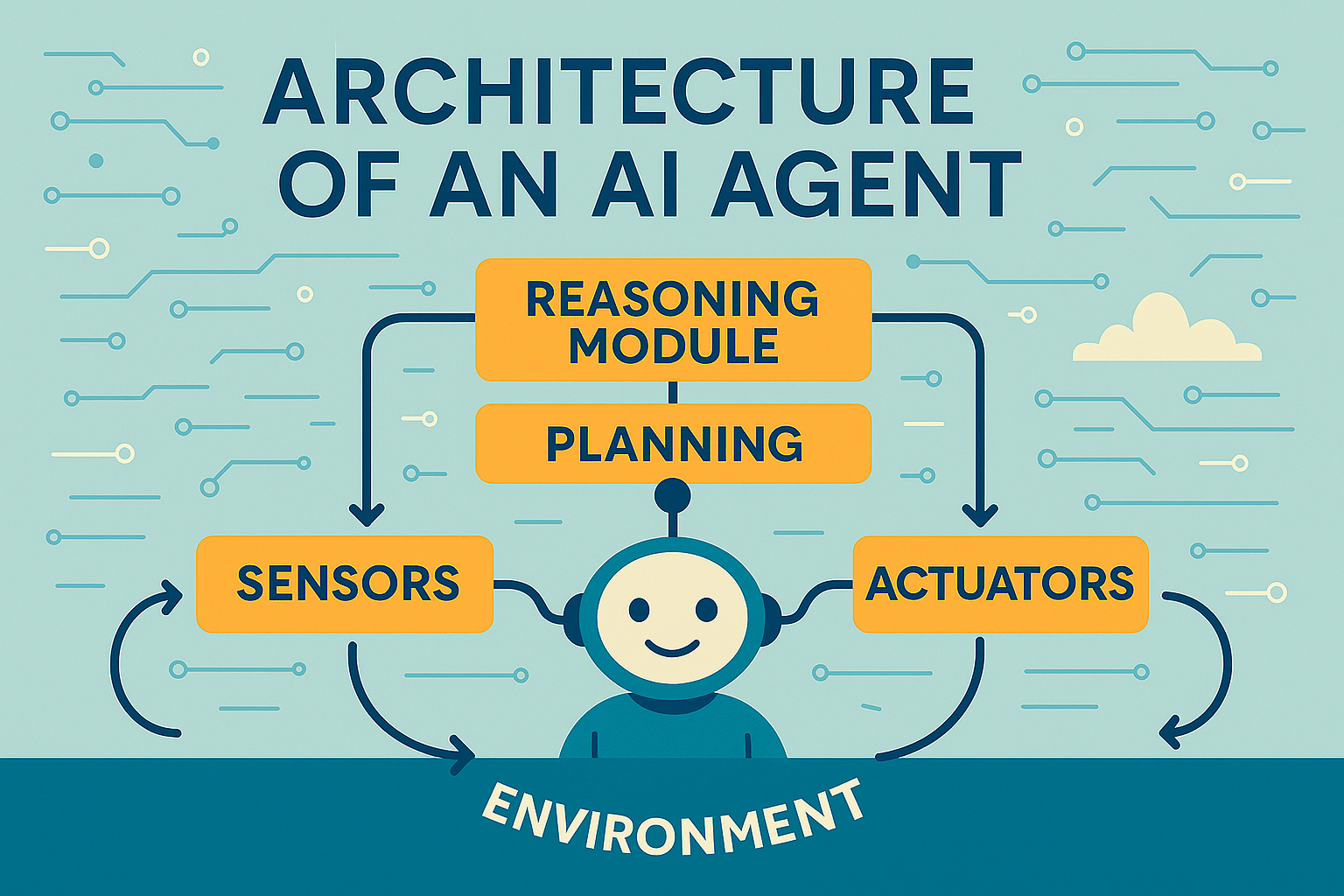

AI agent architecture refers to the structural framework that enables an agent to function intelligently. It determines how an agent:

- Perceives the environment through sensors,

- Makes decisions using reasoning modules,

- Acts using actuators, and

- Learns through feedback over time.

At the heart of this architecture is a simple but powerful cycle: Perceive → Decide → Act → Learn.

The Agent-Environment Interaction Loop

Every intelligent agent operates in a constant feedback loop with its environment. Here’s how this loop works:

- Perceive: The agent observes the environment through sensors (e.g., camera, microphone).

- Decide: The internal decision-making module processes the input and decides the best course of action.

- Act: The agent interacts with the environment via actuators (e.g., motors, displays, speakers).

- Learn (optional, but critical for adaptive agents): Based on feedback, the agent adapts its future actions.

This loop is the foundation of autonomy and adaptability in AI agents.
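To make the loop concrete, here is a minimal Python sketch. The `Room` environment, its temperature numbers, and the `SimpleAgent` class are hypothetical illustrations, and the "learn" step only records experience; a real learning agent would go further and update its decision policy from that history.

```python
from dataclasses import dataclass, field
from typing import List, Tuple


@dataclass
class Room:
    """Hypothetical toy environment: a room whose temperature drifts."""
    temperature: float = 15.0

    def observe(self) -> float:
        return self.temperature

    def apply(self, action: str) -> float:
        # Heating adds 1.0 °C per step; otherwise the room cools by 0.5 °C.
        self.temperature += 1.0 if action == "heat" else -0.5
        # Feedback signal: negative distance from a 21 °C comfort target.
        return -abs(self.temperature - 21.0)


@dataclass
class SimpleAgent:
    threshold: float = 20.0
    history: List[Tuple[float, str, float]] = field(default_factory=list)

    def perceive(self, env: Room) -> float:
        return env.observe()

    def decide(self, percept: float) -> str:
        return "heat" if percept < self.threshold else "idle"

    def learn(self, percept: float, action: str, feedback: float) -> None:
        # Placeholder learning step: store experience for later adaptation.
        self.history.append((percept, action, feedback))


def run(agent: SimpleAgent, env: Room, steps: int) -> None:
    for _ in range(steps):
        percept = agent.perceive(env)            # Perceive
        action = agent.decide(percept)           # Decide
        feedback = env.apply(action)             # Act
        agent.learn(percept, action, feedback)   # Learn


room, agent = Room(), SimpleAgent()
run(agent, room, steps=10)
print(room.temperature)  # 20.5
```

Each pass through `run` is one turn of the Perceive, Decide, Act, Learn cycle; the agent and environment only communicate through percepts, actions, and feedback.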

Core Components of AI Agent Architecture

Let’s break down the internal parts of this architecture.

1. Sensors (Input Layer)

Sensors are the entry points through which agents gather data about their surroundings. Depending on the use case, these can include:

- Visual sensors (e.g., cameras, lidar)

- Audio sensors (e.g., microphones)

- Touch sensors (e.g., pressure or vibration)

- Environmental sensors (e.g., temperature, proximity)

Readings from these sensors are combined into a perception model: the agent’s internal picture of its surroundings, which the decision layer then works from.
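One common way to merge several noisy readings of the same quantity into a single estimate is inverse-variance weighting, where less noisy sensors get more say. The sketch below assumes two hypothetical distance sensors and a made-up 0.5 m "obstacle near" threshold; real robots often use more elaborate fusion, such as Kalman filtering.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Reading:
    sensor: str         # hypothetical sensor name, e.g. "lidar"
    distance_m: float   # measured distance to the nearest obstacle
    variance: float     # sensor noise; smaller means more trustworthy


@dataclass
class Percept:
    obstacle_distance_m: float
    obstacle_near: bool


def fuse(readings: List[Reading], near_threshold_m: float = 0.5) -> Percept:
    """Combine distance readings with inverse-variance weighting."""
    if not readings:
        raise ValueError("need at least one reading")
    weights = [1.0 / r.variance for r in readings]
    fused = sum(w * r.distance_m for w, r in zip(weights, readings)) / sum(weights)
    return Percept(obstacle_distance_m=fused, obstacle_near=fused < near_threshold_m)


percept = fuse([Reading("lidar", 1.0, 0.25), Reading("ultrasonic", 1.5, 1.0)])
print(percept.obstacle_distance_m)  # 1.1, pulled toward the more precise lidar
```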

2. Reasoning or Processing Module (Decision Layer)

This is the brain of the agent—where decisions are made. It may include:

- Rule-based systems (e.g., decision trees, if-then logic)

- Planning algorithms (e.g., search, A*, constraint solving)

- Machine learning models (e.g., neural networks, reinforcement learning)

The complexity here depends on the agent’s type. For instance, a learning agent will use dynamic models to adapt, whereas a reactive agent may just respond based on static rules.
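A rule-based decision module can be as simple as an ordered list of condition-action pairs, where the first matching rule wins. The percept keys (`cliff`, `obstacle_m`, `battery`), thresholds, and action names below are hypothetical; swapping `decide` for a planner or a trained model would leave the rest of the agent unchanged.

```python
from typing import Callable, Dict, List, Tuple

Percept = Dict[str, float]
Rule = Tuple[Callable[[Percept], bool], str]

# Ordered by priority: safety rules come first.
RULES: List[Rule] = [
    (lambda p: bool(p["cliff"]), "back_up"),
    (lambda p: p["obstacle_m"] < 0.3, "turn_left"),
    (lambda p: p["battery"] < 0.15, "return_to_dock"),
]


def decide(percept: Percept, rules: List[Rule] = RULES,
           default: str = "move_forward") -> str:
    """Return the action of the first rule whose condition holds."""
    for condition, action in rules:
        if condition(percept):
            return action
    return default


print(decide({"cliff": 0, "obstacle_m": 1.2, "battery": 0.10}))  # return_to_dock
```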

3. Actuators (Output Layer)

Actuators are responsible for taking action. This could mean:

- A robot arm moving objects

- A virtual assistant speaking or typing responses

- A software agent making a database change or sending alerts

Actuators close the loop by carrying out the agent’s decision in its environment, whether that environment is physical or digital.
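In software agents, it often helps to keep the decision layer unaware of how an action is physically or technically carried out. A minimal sketch, assuming a hypothetical `ActuatorRegistry` that maps abstract action names to handler functions; the `send_alert` handler just appends to an in-memory outbox instead of calling a real notification service.

```python
from typing import Callable, Dict, List

Handler = Callable[[dict], str]


class ActuatorRegistry:
    """Routes abstract actions chosen by the decision layer to concrete effectors."""

    def __init__(self) -> None:
        self._handlers: Dict[str, Handler] = {}

    def register(self, action: str, handler: Handler) -> None:
        self._handlers[action] = handler

    def execute(self, action: str, params: dict) -> str:
        handler = self._handlers.get(action)
        if handler is None:
            raise KeyError(f"no actuator registered for {action!r}")
        return handler(params)


outbox: List[str] = []  # stand-in for an email/SMS/pager integration


def send_alert(params: dict) -> str:
    outbox.append(params["message"])
    return "alert queued"


actuators = ActuatorRegistry()
actuators.register("send_alert", send_alert)
print(actuators.execute("send_alert", {"message": "Disk usage above 90%"}))  # alert queued
```

Adding a new capability (a robot arm driver, a database writer) then means registering one more handler rather than changing the decision logic.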

Planning and Execution Layers

More advanced agents divide their architecture into planning and execution subsystems.

Planning Layer

- Goal analysis: What does the agent want to achieve?

- Strategy development: Which path gets us there efficiently?

- Scenario simulation: What are the risks, rewards, and outcomes?

This layer compares multiple options, often by simulating their likely outcomes, before the agent acts.
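For the strategy-development step, a classic choice is A* search, one of the planning algorithms mentioned earlier. The sketch below plans a route on a small grid map where `#` marks blocked cells; the grid encoding, unit step costs, and 4-way movement are assumptions made for illustration.

```python
import heapq
from typing import Dict, List, Optional, Tuple

Cell = Tuple[int, int]


def a_star(grid: List[str], start: Cell, goal: Cell) -> Optional[List[Cell]]:
    """A* over a 4-connected grid; returns the cell path or None if unreachable."""
    rows, cols = len(grid), len(grid[0])

    def h(c: Cell) -> int:
        # Manhattan distance: never overestimates with unit-cost 4-way moves.
        return abs(c[0] - goal[0]) + abs(c[1] - goal[1])

    open_heap = [(h(start), 0, start)]  # entries are (f = g + h, g, cell)
    came_from: Dict[Cell, Cell] = {}
    g: Dict[Cell, int] = {start: 0}

    while open_heap:
        _, cost, cell = heapq.heappop(open_heap)
        if cell == goal:
            path = [cell]
            while cell in came_from:  # walk parent links back to the start
                cell = came_from[cell]
                path.append(cell)
            return path[::-1]
        if cost > g[cell]:
            continue  # stale heap entry; a cheaper route was already found
        r, c = cell
        for nxt in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
            nr, nc = nxt
            if 0 <= nr < rows and 0 <= nc < cols and grid[nr][nc] != "#":
                new_cost = cost + 1
                if new_cost < g.get(nxt, float("inf")):
                    g[nxt] = new_cost
                    came_from[nxt] = cell
                    heapq.heappush(open_heap, (new_cost + h(nxt), new_cost, nxt))
    return None


grid = [
    "....",
    ".##.",
    "....",
]
print(a_star(grid, (0, 0), (2, 3)))
```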

Execution Layer

- Action execution: Carries out the chosen plan

- Monitoring: Evaluates real-time progress

- Adjustments: Responds to deviations using updated input

Separating planning from execution helps improve adaptability and scalability, especially in dynamic environments like robotics or autonomous vehicles.
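The three execution-layer duties (act, monitor, adjust) can be sketched as a loop that follows a plan, checks whether each move succeeded, and asks a planner for a new route from the current position when one fails. `try_move` and `replan` are hypothetical callbacks standing in for real actuators and a real planner, and `replan` is assumed to return a path that starts at the agent's current cell.

```python
from typing import Callable, List, Tuple

Cell = Tuple[int, int]


def execute(plan: List[Cell],
            try_move: Callable[[Cell], bool],
            replan: Callable[[Cell], List[Cell]],
            max_replans: int = 3) -> List[Cell]:
    """Follow a plan, monitor each step, and replan when a move fails."""
    position = plan[0]
    trace = [position]
    remaining = plan[1:]
    replans = 0
    while remaining:
        step = remaining[0]
        if try_move(step):                  # act
            position = step
            trace.append(position)
            remaining = remaining[1:]
        else:                               # monitoring detected a deviation
            if replans == max_replans:
                raise RuntimeError("giving up after repeated failures")
            replans += 1
            new_plan = replan(position)     # adjust from the current state
            remaining = new_plan[1:]        # drop the current cell itself
    return trace


blocked = {(0, 1)}  # an obstacle the original plan did not know about
trace = execute(
    plan=[(0, 0), (0, 1), (0, 2)],
    try_move=lambda cell: cell not in blocked,
    replan=lambda here: [here, (1, 0), (1, 1), (1, 2), (0, 2)],  # fixed detour for illustration
)
print(trace)  # [(0, 0), (1, 0), (1, 1), (1, 2), (0, 2)]
```

Because the executor only needs a `replan` callback, the planner from the previous layer can be swapped in without touching the monitoring logic.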

Real-World Examples of AI Agent Architectures

Let’s look at some practical implementations where different architectures shine:

| Use Case | Agent Type | Key Architecture Features |
|---|---|---|
| Smart Thermostat | Reactive Agent | Basic sensor-actuator loop |
| Warehouse Robot | Model-Based Agent | Includes map-based memory + proximity sensors |
| Navigation System | Goal-Based Agent | Pathfinding using planning algorithms |
| Stock Trading Bot | Utility-Based Agent | Decision module optimized for highest ROI |
| Self-Driving Car | Learning Agent | Combines sensor fusion, ML, and real-time planning |
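What sets a utility-based agent apart is that it scores every option and picks the one with the highest expected utility, so the choice of utility function shapes its behavior. The actions, probabilities, and returns below are made-up numbers, and the two utility functions (risk-neutral, and logarithmic as a risk-averse alternative) are illustrative choices, not a description of how any particular trading bot works.

```python
import math
from typing import Callable, Dict, List, Tuple

# Each action maps to possible outcomes: (probability, return as a fraction).
Outcomes = List[Tuple[float, float]]

ACTIONS: Dict[str, Outcomes] = {
    "hold":  [(1.0, 0.0)],
    "buy_a": [(0.5, 0.20), (0.5, -0.10)],   # modest upside, modest downside
    "buy_b": [(0.1, 1.00), (0.9, -0.05)],   # rare big win, frequent small loss
}


def expected_utility(outcomes: Outcomes, utility: Callable[[float], float]) -> float:
    return sum(p * utility(r) for p, r in outcomes)


def choose(actions: Dict[str, Outcomes], utility: Callable[[float], float]) -> str:
    """Pick the action whose expected utility is highest."""
    return max(actions, key=lambda a: expected_utility(actions[a], utility))


print(choose(ACTIONS, lambda r: r))  # buy_b: highest expected return
print(choose(ACTIONS, math.log1p))   # buy_a: preferred under log (risk-averse) utility
```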

Visualizing a Full AI Agent Architecture

To visualize this, imagine a robotic vacuum:

- Sensors: Detect walls, cliffs, and obstacles

- Reasoning Module: Plans cleaning path and avoids collisions

- Actuators: Move wheels, spin brushes, signal low battery

- Learning Module: Remembers efficient paths and adapts over time

Each module handles one stage of the perceive, decide, act, learn loop, and together they produce behavior that looks like intelligent decision-making.
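The vacuum’s learning module could start as a simple table of how long each cleaning route has taken. A minimal sketch, assuming hypothetical route names and timings: the agent tries every route once, then prefers the route with the lowest average time, and switches if that route starts performing worse.

```python
from collections import defaultdict
from typing import Dict, List


class PathMemory:
    """Hypothetical learning module: remembers how long each route took."""

    def __init__(self) -> None:
        self._totals: Dict[str, float] = defaultdict(float)
        self._counts: Dict[str, int] = defaultdict(int)

    def record(self, route: str, seconds: float) -> None:
        self._totals[route] += seconds
        self._counts[route] += 1

    def average(self, route: str) -> float:
        return self._totals[route] / self._counts[route]

    def choose(self, routes: List[str]) -> str:
        untried = [r for r in routes if self._counts[r] == 0]
        if untried:
            return untried[0]  # explore each route at least once
        return min(routes, key=self.average)  # then exploit the fastest on average


memory = PathMemory()
routes = ["perimeter_first", "zigzag"]
for route, seconds in [("perimeter_first", 300.0), ("zigzag", 240.0), ("zigzag", 400.0)]:
    memory.record(route, seconds)
print(memory.choose(routes))  # perimeter_first: zigzag now averages 320 s
```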

Why Architecture Matters

The architecture determines the capability of the AI agent. Just like a good foundation supports a strong building, a well-designed architecture allows agents to:

- Adapt to real-world changes

- Improve over time

- Execute complex tasks efficiently

- Collaborate in multi-agent environments

If you’re planning to design or deploy intelligent systems, understanding architecture is a crucial step.

What’s Next?

In our next post, we’ll dive deeper into Learning Agents—how they improve over time, what algorithms power them, and where they are being used today.

👉 Next in the series: Learning Agents and Their Use Cases →

Until then, feel free to revisit our previous posts: