Google Gemini 2.5 Pro: Ushering in a New Era of AI Intelligence

Google has recently unveiled its latest and most advanced artificial intelligence model, Gemini 2.5 Pro, marking a significant milestone in the company’s pursuit of cutting-edge AI technology. This new iteration, the first in the Gemini 2.5 family, is being touted as Google’s “most intelligent AI model” to date and represents a substantial step forward in its ability to reason and understand complex information. Initially made available to Gemini Advanced users, the experimental version of Gemini 2.5 Pro has now been extended to free users, albeit with certain limitations, and is also accessible to developers through Google AI Studio, with plans for integration into Vertex AI in the near future. This widespread availability signals Google’s intention to rapidly disseminate its most powerful AI capabilities across a broad spectrum of users and developers.

What Makes Gemini 2.5 Pro a Game Changer?

The Power of “Thinking” and Enhanced Reasoning

At the heart of Gemini 2.5 Pro’s advancements lies its enhanced reasoning capabilities, often referred to as “thinking” abilities. This refers to the model’s capacity to go beyond mere pattern recognition and engage in more sophisticated cognitive processes. Google elaborates that this involves the ability to analyze information deeply, draw logical conclusions based on that analysis, understand and incorporate context and nuance, and ultimately make well-informed decisions. This focus on reasoning represents a strategic evolution in Google’s AI development. Previously, they introduced “Flash Thinking” with the Gemini 2.0 model, which aimed to balance reasoning with speed. However, with the advent of Gemini 2.5 Pro, Google appears to be moving towards a more unified approach, integrating these advanced reasoning capabilities natively into all future models rather than offering them as a separate feature or brand. This shift suggests a move towards creating more universally intelligent AI systems that can handle increasingly complex tasks with greater accuracy and understanding. The enhanced reasoning allows Gemini 2.5 Pro to deliver more accurate results and achieve superior performance in tackling intricate problems.

Native Multimodality: Understanding Beyond Text

Building upon the foundations laid by its predecessors, Gemini 2.5 Pro boasts native multimodality, enabling it to process and understand information from a variety of sources, including text, images, audio, and video. This capability allows the model to interact with the world in a more comprehensive and human-like manner, as it can simultaneously analyze and integrate information from different sensory inputs. For instance, it can analyze screenshots in conjunction with code to understand the context of a software issue or interpret diagrams and architectural schemas to provide relevant insights. This integrated understanding of diverse data types opens up a wide array of potential applications, from enhanced content creation and more nuanced data analysis to more intuitive and sophisticated human-computer interactions. The ability to process and synthesize information across multiple modalities significantly enhances the model’s understanding of real-world scenarios, where information is rarely presented in a single format.

The Unprecedented Context Window: Processing Massive Information

One of the most remarkable features of Gemini 2.5 Pro is its exceptionally large context window, currently 1 million tokens, with plans to expand it to 2 million tokens in the near future. To put this into perspective, 1 million tokens roughly equates to 750,000 words, or an entire codebase of approximately 30,000 lines of code complete with its accompanying documentation. This context window dwarfs that of many competing models. For example, OpenAI’s o3-mini and Anthropic’s Claude 3.7 Sonnet typically offer context windows of around 200,000 tokens, while DeepSeek R1 has a limit of 128,000 tokens. Currently, Grok 3 is the only other model that matches Gemini 2.5 Pro’s 1 million token capacity. A window of this size has a transformative impact on the model’s capabilities. It enables the analysis of massive amounts of data, the understanding of extremely long documents, and the handling of complex, multi-faceted projects without breaking them into smaller segments or relying on complex retrieval-augmented generation (RAG) pipelines to maintain context. This streamlines workflows and can improve the accuracy and coherence of AI responses, because the model retains and processes a much broader view of the information at hand.
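The arithmetic above can be turned into a rough pre-flight check. The following is a minimal sketch, assuming the article's ratio of about 750,000 words per 1 million tokens holds for your text; real tokenizers vary by language and content, and the function names here are hypothetical helpers, not part of any Google SDK.

```python
import math

# Assumption taken from the article: 1,000,000 tokens is roughly 750,000 words.
WORDS_PER_TOKEN = 0.75
CONTEXT_LIMIT_TOKENS = 1_000_000  # current window size cited in the article


def estimate_tokens(text: str) -> int:
    """Rough token estimate from a whitespace word count (not a real tokenizer)."""
    word_count = len(text.split())
    return math.ceil(word_count / WORDS_PER_TOKEN)


def fits_in_context(text: str, reserved_for_output: int = 0,
                    limit: int = CONTEXT_LIMIT_TOKENS) -> bool:
    """True if the estimated prompt plus reserved output tokens fits the window."""
    return estimate_tokens(text) + reserved_for_output <= limit
```

Because the ratio is only an approximation, it is safer to leave a margin below the limit than to rely on an estimate that lands right at the boundary.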

Superior Performance Across Key Domains

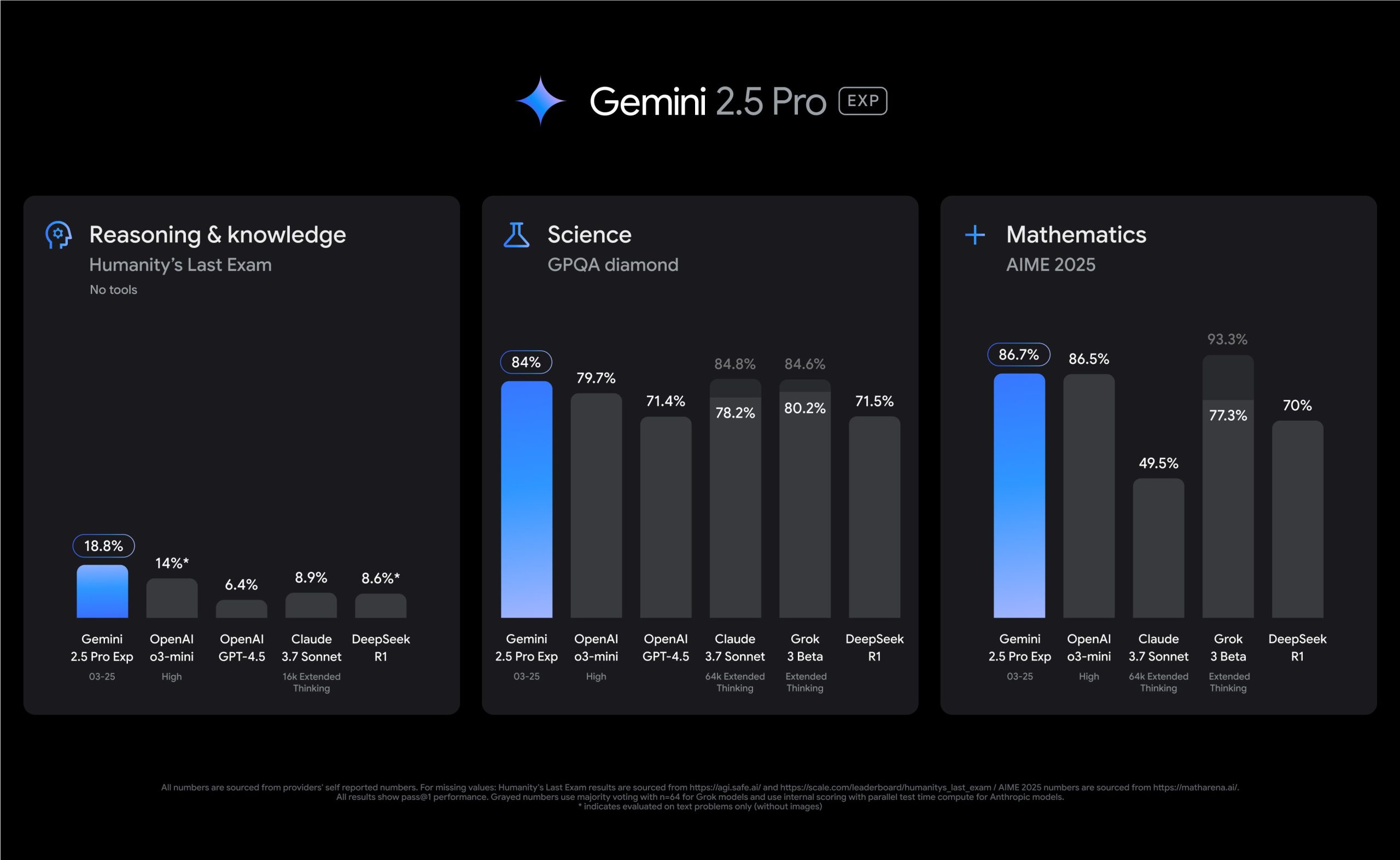

Gemini 2.5 Pro has demonstrated exceptional performance across a variety of critical benchmarks, particularly in areas like coding, reasoning, and mathematics, often achieving industry-leading results. Notably, it currently holds the top position on the LMArena leaderboard, a widely recognized benchmark that ranks large language models based on user feedback, indicating a strong alignment with human preferences and a high-quality output style. In academic reasoning tasks, Gemini 2.5 Pro has shown remarkable proficiency, scoring 86.7% on the AIME 2025 mathematics exam and 84.0% on the GPQA diamond benchmark for science. Furthermore, it achieved a leading score of 18.8% on the challenging Humanity’s Last Exam, a comprehensive test spanning mathematics, science, and humanities, and it did so without employing expensive test-time techniques that can artificially inflate scores.

In the realm of software development, Gemini 2.5 Pro is competitive without leading every benchmark. It scored 63.8% on SWE-bench Verified for agentic coding, placing it ahead of several top-tier models but behind Claude 3.7 Sonnet at 70.3%. On LiveCodeBench v5, which evaluates code generation capabilities, Gemini 2.5 Pro scored 70.4%, behind o3-mini at 74.1%. On the Aider Polyglot benchmark for code editing, it outperformed Claude 3.7 Sonnet with a score of 74.0% against 64.9%. Google emphasizes the model’s proficiency in creating visually appealing web applications and sophisticated agentic code applications, even from a single line of instruction.

One area where Gemini 2.5 Pro truly shines is in its ability to understand and process information within a long context. It achieved an impressive score of 91.5% on the MRCR benchmark for a 128,000 token context length, significantly surpassing the performance of competitors like o3-mini and GPT-4.5. Additionally, it leads in multimodal understanding, achieving a score of 81.7% on the MMMU benchmark.

To better visualize these performance metrics, the following table compares Gemini 2.5 Pro’s scores on key benchmarks against some of its main competitors:

| Benchmark | Gemini 2.5 Pro Score | OpenAI o3-mini Score | Anthropic Claude 3.7 Sonnet Score | DeepSeek R1 Score | |
|---|---|---|---|---|---|
| LMArena | #1 | – | – | – | – |
| AIME 2025 | 86.7% | 86.5% | – | – | – |
| GPQA Diamond | 84.0% | – | 75% | – | 80.2% |
| Humanity’s Last Exam | 18.8% | 14% | 8.9% | 8.6% | – |
| SWE-bench Verified | 63.8% | – | 70.3% | – | – |
| LiveCodeBench v5 | 70.4% | 74.1% | – | – | – |
| Aider Polyglot | 74.0% | – | 64.9% | – | – |
| MRCR (128k context) | 91.5% | 36.3% | – | – | – |
| MMMU | 81.7% | – | 75% | – | – |

This data underscores Gemini 2.5 Pro’s strengths in reasoning, long-context understanding, and multimodal tasks, while also highlighting areas where competition remains strong, particularly in certain coding benchmarks.

Accessing the Power: Availability and How to Use Gemini 2.5 Pro

For Everyday Users: Free Access with Limitations

In a move that surprised many, Google began rolling out the experimental version of Gemini 2.5 Pro to all free Gemini app users in late March 2025. This decision reflects Google’s ambition to make its most advanced AI model accessible to a wider audience for exploration and feedback. However, this free access comes with certain limitations, primarily in the form of tighter rate limits for non-subscribers. While this allows users to experience the capabilities of Gemini 2.5 Pro, heavy or frequent usage may be restricted.

Enhanced Access for Subscribers: Gemini Advanced

For users requiring more extensive access and enhanced capabilities, Google offers the Gemini Advanced subscription, which is part of the Google One AI Premium plan priced at $19.99 per month in the United States. Subscribers to Gemini Advanced benefit from expanded access to Gemini 2.5 Pro and a significantly larger context window compared to free users. This tier also provides integration with Google Workspace applications and the ability to upload files for analysis, offering a more powerful and versatile experience for professionals and users with demanding needs.

For Developers and Enterprises: Google AI Studio and Vertex AI

Developers and enterprises can also use Gemini 2.5 Pro, which is available in public preview through the Gemini API in Google AI Studio. Google has reported significant enthusiasm and early adoption among developers. The model is also slated to come to Vertex AI, Google’s machine learning platform, in the near future, giving developers a robust platform for deploying and scaling applications powered by Gemini 2.5 Pro. In a related move, Google is discontinuing the Gemini 2.0 Pro preview, signaling a clear focus on the newer and more advanced 2.5 Pro model.
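For developers who want to see what a call through the Gemini API looks like, here is a minimal sketch that only builds the HTTP request and does not send it. It assumes the public REST `generateContent` endpoint and an API key created in Google AI Studio. The model identifier below is an assumption and may differ from the name currently listed in AI Studio, and `build_generate_request` is a hypothetical helper, not part of any Google library.

```python
import json
import urllib.request

API_ROOT = "https://generativelanguage.googleapis.com/v1beta"
# Assumed identifier for the experimental model; check AI Studio for the current name.
MODEL_ID = "gemini-2.5-pro-exp-03-25"


def build_generate_request(prompt: str, api_key: str,
                           model: str = MODEL_ID) -> urllib.request.Request:
    """Build (but do not send) a generateContent request for a text prompt."""
    body = {"contents": [{"parts": [{"text": prompt}]}]}
    return urllib.request.Request(
        url=f"{API_ROOT}/models/{model}:generateContent",
        data=json.dumps(body).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "x-goog-api-key": api_key,  # key issued in Google AI Studio
        },
        method="POST",
    )
```

Sending the request would be a matter of passing it to `urllib.request.urlopen` and parsing the JSON response; that step is left out here so the sketch runs without network access or a real key.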

Decoding the Cost: Pricing Structure for Developers

Google has officially released the API pricing for Gemini 2.5 Pro, positioning it as its most expensive AI model to date. The pricing structure, which took effect on Friday, April 4, 2025, is tiered based on the length of the prompts used:

- For prompts containing 200,000 tokens or fewer, the cost is $1.25 per million input tokens and $10 per million output tokens.

- For prompts exceeding 200,000 tokens (up to the maximum of 1,048,576 tokens), the pricing increases to $2.50 per million input tokens and $15 per million output tokens.
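The two tiers above can be expressed as a small cost estimator. This is a sketch under the assumption that both the input and output rates are selected by prompt length, as the list describes; the function name is hypothetical, and actual billing may differ in rounding or other details.

```python
TIER_THRESHOLD_TOKENS = 200_000
MAX_PROMPT_TOKENS = 1_048_576  # maximum prompt size stated in the article


def estimate_cost_usd(prompt_tokens: int, output_tokens: int) -> float:
    """Estimate the cost of one request from the article's published rates.

    output_tokens should be the full billed output count for the request.
    """
    if not 0 <= prompt_tokens <= MAX_PROMPT_TOKENS:
        raise ValueError("prompt_tokens outside the supported range")
    if output_tokens < 0:
        raise ValueError("output_tokens must be non-negative")

    if prompt_tokens <= TIER_THRESHOLD_TOKENS:
        input_rate, output_rate = 1.25, 10.00   # USD per 1M tokens
    else:
        input_rate, output_rate = 2.50, 15.00   # USD per 1M tokens

    return (prompt_tokens * input_rate + output_tokens * output_rate) / 1_000_000
```

For example, a 100,000-token prompt with 2,000 output tokens comes to about $0.145, while a 500,000-token prompt with the same output comes to about $1.28, since the longer prompt moves both rates into the higher tier.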

This pricing model places Gemini 2.5 Pro in an interesting position within the competitive landscape. Its input token pricing is similar to that of Gemini 1.5 Pro, but its output token costs are notably higher: $10 versus $5 per million tokens in the lower tier, and $15 versus $10 in the higher tier. Compared to other leading models, Gemini 2.5 Pro has a lower input price than GPT-4o for shorter prompts ($1.25 versus $2.50 per million tokens) at the same $10 output price, and it also undercuts Claude 3.7 Sonnet ($3.00 input, $15.00 output) within comparable context windows. However, it is more expensive than budget-friendly options like OpenAI’s o3-mini and Google’s own Gemini 2.0 Flash.

Rate limits for the paid API also vary depending on the user’s tier, ranging from 150 requests per minute in the basic tier to 2,000 requests per minute for higher-spending users. The free tier in Google AI Studio remains limited to 5 requests per minute and 25 requests per day. It’s also important to note that the cost of “thinking” tokens, which represent the model’s internal processing during reasoning, is included in the output token count. This means developers need to factor in these additional tokens when estimating the cost of using the model for complex tasks. Despite being Google’s most expensive AI model, the initial response to the pricing from developers has been largely positive, suggesting that they perceive the performance gains and advanced capabilities as justifying the cost.
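To stay within limits like the free tier's 5 requests per minute and 25 requests per day, a client can track its own request timestamps. The following is a minimal sketch of a sliding-window limiter. It assumes both limits behave as rolling windows, which may not match how Google actually resets its quotas, and the class name is hypothetical.

```python
from collections import deque

SECONDS_PER_MINUTE = 60
SECONDS_PER_DAY = 86_400


class SlidingWindowLimiter:
    """Client-side guard for per-minute and per-day request limits."""

    def __init__(self, per_minute: int = 5, per_day: int = 25):
        self.per_minute = per_minute
        self.per_day = per_day
        self.sent = deque()  # timestamps (seconds) of accepted requests

    def try_acquire(self, now: float) -> bool:
        """Record and allow a request at time `now`, or return False if over limit."""
        # Forget requests that are at least a day old.
        while self.sent and now - self.sent[0] >= SECONDS_PER_DAY:
            self.sent.popleft()
        in_last_minute = sum(1 for t in self.sent if now - t < SECONDS_PER_MINUTE)
        if len(self.sent) >= self.per_day or in_last_minute >= self.per_minute:
            return False
        self.sent.append(now)
        return True
```

The timestamp is passed in rather than read from the system clock so the behavior is easy to test. In real use, `time.monotonic()` is a reasonable source for `now`, and the paid-tier figures from the article (150 or 2,000 requests per minute) can be supplied through the constructor.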

To provide a clearer comparison of the pricing, the following table outlines the cost per million tokens for input and output across several key AI models:

| AI Model | Input Price (per 1M tokens) | Output Price (per 1M tokens) |
|---|---|---|
| Gemini 2.5 Pro (<= 200k) | $1.25 | $10.00 |
| Gemini 2.5 Pro (> 200k) | $2.50 | $15.00 |
| Gemini 1.5 Pro (<= 128k) | $1.25 | $5.00 |
| Gemini 1.5 Pro (> 128k) | $2.50 | $10.00 |
| GPT-4o | $2.50 | $10.00 |
| Claude 3.7 Sonnet | $3.00 | $15.00 |
| OpenAI o3-mini | $1.10 | $4.40 |
| Gemini 2.0 Flash | $0.10 | $0.40 |

This comparison highlights the premium pricing of Gemini 2.5 Pro, reflecting its advanced capabilities and Google’s assessment of its value proposition in the market.

Real-World Impact: Potential Use Cases and Applications

Empowering Developers with Advanced Capabilities

The unique features of Gemini 2.5 Pro, particularly its massive context window and enhanced reasoning abilities, offer significant advantages for developers. The ability to analyze entire codebases at once, along with comprehensive documentation, allows for more efficient debugging, better architectural planning, and the generation of higher-quality code with fewer errors. Developers can leverage this model for a wide range of tasks, including in-depth codebase analysis, automated documentation generation, building complex web applications with production-ready code, implementing intricate architectural patterns, and even generating functional applications like video games from simple prompts.

Transforming Small Businesses with Accessible AI

The increased accessibility of sophisticated AI tools like Gemini 2.5 Pro, coupled with its competitive pricing tiers, presents a significant opportunity for small businesses to enhance their operations. These businesses can leverage the model’s natural language processing capabilities to improve customer interactions through chatbots and virtual assistants, leading to faster response times and increased customer satisfaction. Furthermore, Gemini 2.5 Pro can assist with smarter data analysis, providing valuable insights into consumer behavior and sales trends, enabling business owners to make more informed decisions. The automation of routine tasks, such as invoicing and follow-up emails, can also free up valuable time for owners and employees to focus on strategic growth initiatives. However, it is crucial for small businesses to develop well-defined strategies for AI deployment, ensure effective data management, and adhere to privacy regulations to maximize the benefits of this technology.

Advancing Research and Innovation Across Industries

The strong performance of Gemini 2.5 Pro in reasoning, mathematics, and science suggests its potential to significantly contribute to research and innovation across various industries. Its ability to process and understand information from multiple modalities could be invaluable for analyzing complex datasets in fields like medicine, materials science, and environmental research, potentially leading to new discoveries and breakthroughs. The model’s large context window is particularly beneficial for analyzing extensive research papers and datasets, enabling researchers to extract insights and identify connections that might be missed through traditional methods.

Google’s Strategic Vision: AI for Everyone

Google’s decision to make the experimental version of Gemini 2.5 Pro available to all free Gemini app users underscores its strategic goal of democratizing access to advanced AI technology. The remarkable 80% increase in usage of Google’s AI Studio and Gemini API in March 2025 alone highlights the significant demand for this type of technology. This move aligns with Google’s broader vision of integrating powerful AI capabilities into the hands of developers, businesses, and individuals, fostering innovation and progress across various sectors. By actively soliciting and incorporating feedback from developers and users, Google aims to continuously improve its Gemini models, ensuring that they meet the evolving needs of a rapidly advancing technological landscape.

Conclusion: The Future is Intelligent with Gemini 2.5 Pro

Google’s Gemini 2.5 Pro represents a significant leap forward in the field of artificial intelligence. Its enhanced reasoning capabilities, native multimodality, unprecedented context window, and strong performance across critical benchmarks position it as a leading AI model with the potential to transform various industries. The accessibility of Gemini 2.5 Pro to a wide range of users, from free tier experimenters to Gemini Advanced subscribers and professional developers, underscores Google’s commitment to making advanced AI a widely available resource. While its pricing positions it as Google’s most expensive AI model, the perceived value and performance gains appear to resonate positively with the developer community. As Google continues to refine and expand its Gemini family of models, Gemini 2.5 Pro serves as a powerful indicator of the increasingly sophisticated and versatile AI technologies that will shape our future.