Microsoft Warns of AI Capacity Constraints Amid Surging Demand

Introduction

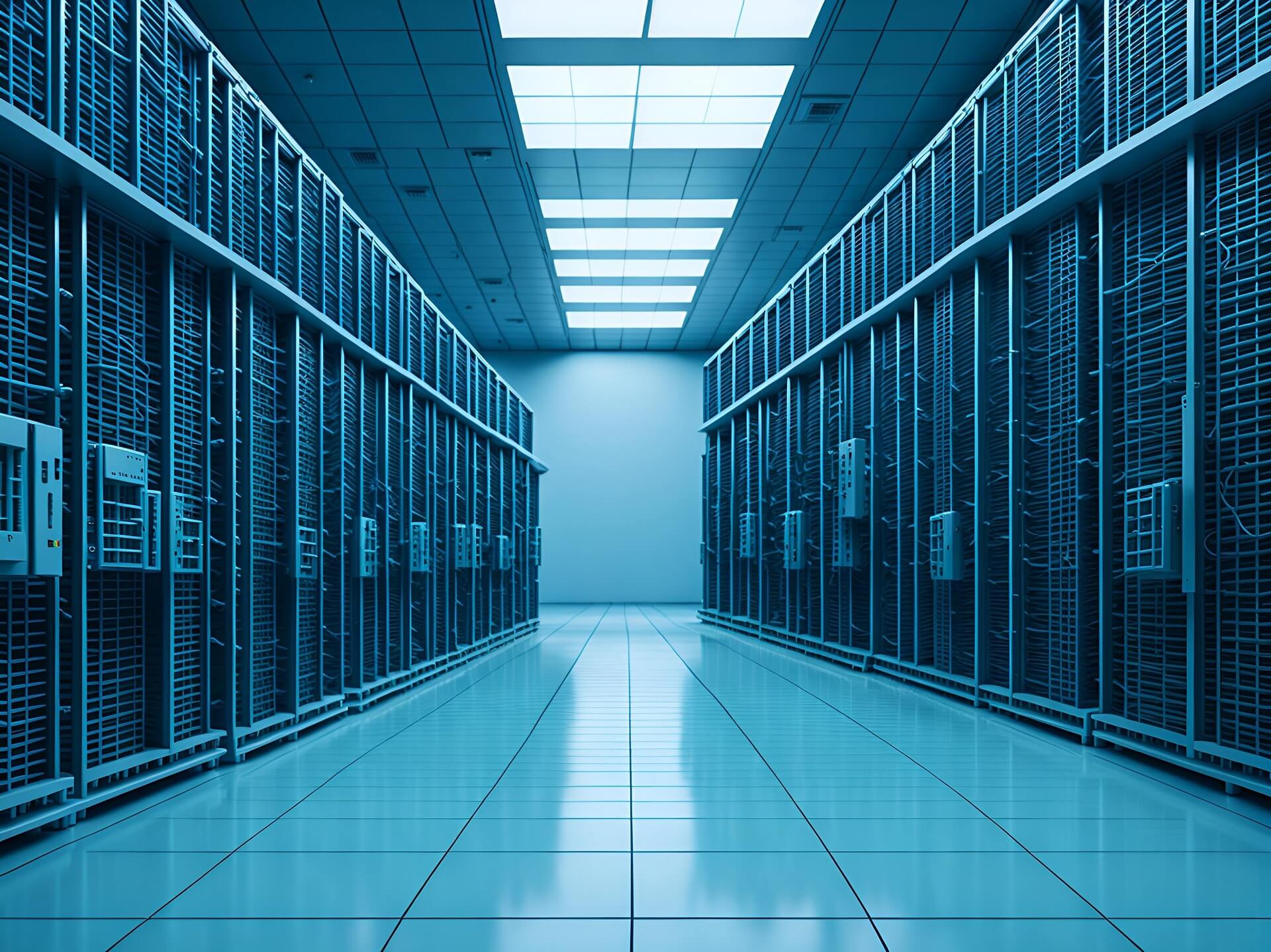

In a recent earnings call, Microsoft cautioned stakeholders about impending AI capacity constraints, a consequence of demand for artificial intelligence services outpacing the company’s current infrastructure. This development underscores the challenges tech giants face in scaling their operations to meet the rapid adoption of AI technologies.

The warning comes on the heels of a strong financial performance in which Microsoft exceeded revenue expectations, largely on the strength of AI-powered cloud demand. According to Reuters, the company’s focus on AI is already yielding financial returns, but it is also putting pressure on its physical infrastructure.

The Surge in AI Demand

We’re living through what can only be called an AI revolution. Businesses of all sizes—from startups to Fortune 500s—are deploying artificial intelligence to automate tasks, improve customer experience, and optimize decision-making. Microsoft’s Azure platform has become a key player in powering this transformation.

One standout driver of this surge is Microsoft Copilot, the AI-powered assistant now deeply embedded in tools like Word, Excel, Outlook, and Teams. As organizations adopt Copilot across their workflows, it’s significantly increasing the demand for cloud computing and AI model inference on Azure’s infrastructure.

With Azure usage skyrocketing, existing data centers are feeling the pressure. The demand is so intense that it’s directly contributing to notable AI capacity constraints, especially in GPU availability and cooling systems. This raises serious questions about how quickly Microsoft—and the industry as a whole—can scale infrastructure to meet demand.

If you’re curious about the environmental and operational impact of this AI growth, read our breakdown of AI data centers and electricity demand, where we explore how the rise of tools like Copilot is putting new stress on the global energy grid.

Microsoft’s Infrastructure Expansion Efforts

To respond to this demand, Microsoft is making big moves. The company has committed about $80 billion in infrastructure spending this fiscal year, more than half of which is earmarked for U.S. facilities. But building and equipping new data centers is not a plug-and-play solution: it can take years before they’re fully operational.

And that’s assuming there are no regulatory hurdles, no supply chain delays, and no energy access issues—all very real concerns in today’s global climate.

This high-stakes race to keep pace with AI demand is reminiscent of what we’ve seen with Elon Musk’s xAI funding, another aggressive push into the AI space that’s heavily reliant on infrastructure readiness.

Strategic Adjustments and Lease Cancellations

Interestingly, while expanding its buildout, Microsoft has also reportedly canceled leases for a couple hundred megawatts of data center space—roughly the equivalent of two full-scale data centers. The company maintains these cancellations are not directly tied to current constraints, but they signal a shift in how Microsoft is planning its AI infrastructure.

It’s a strategic pivot: instead of simply expanding everywhere, Microsoft is refining where and how it grows. The company is focusing more on building from the ground up in key geographies that support its long-term roadmap, rather than relying on short-term leased capacity that may not be optimized for AI workloads.

Global Expansion and Future Outlook

In addition to domestic infrastructure, Microsoft is thinking globally. The tech giant recently announced that it opened new data centers in 10 countries across four continents—expanding its Azure availability zones to better serve local markets and reduce latency.

This worldwide footprint is a tactical move to ease AI capacity constraints and meet regional compliance requirements. But despite these proactive steps, Microsoft still expects tight capacity in the coming quarter, highlighting how fast AI demand is evolving.

These new data centers will take time to reach full capacity. In the meantime, organizations looking to build on Azure may experience provisioning delays or have to queue for GPU access.
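For teams that expect to hit these delays, one common mitigation is to try more than one region and back off between attempts. The following is a minimal sketch of that idea, not Azure's API: the `provision` callable, the `CapacityUnavailable` exception, and the function name `provision_with_fallback` are all hypothetical stand-ins for whatever provisioning call and error type your own tooling exposes.

```python
import time
from typing import Callable, Optional, Sequence


class CapacityUnavailable(Exception):
    """Hypothetical error: the requested region has no free GPU capacity."""


def provision_with_fallback(
    provision: Callable[[str], str],
    regions: Sequence[str],
    max_rounds: int = 3,
    base_delay: float = 1.0,
    sleep: Callable[[float], None] = time.sleep,
) -> Optional[str]:
    """Try each region in order, backing off exponentially between rounds.

    `provision` is a caller-supplied function (an assumption of this sketch)
    that takes a region name and either returns a resource identifier or
    raises CapacityUnavailable. Returns None if every round fails.
    """
    for round_index in range(max_rounds):
        for region in regions:
            try:
                return provision(region)
            except CapacityUnavailable:
                continue  # this region is full; try the next one
        # Every region was full in this round: wait before retrying,
        # doubling the delay each round (no wait after the final round).
        if round_index < max_rounds - 1:
            sleep(base_delay * 2 ** round_index)
    return None
```

A caller would pass its own provisioning function and an ordered list of acceptable regions, for example `provision_with_fallback(create_gpu_vm, ["eastus", "westeurope"])`, where `create_gpu_vm` is again a placeholder. Ordering the list by preference keeps workloads close to users when capacity allows, while still giving them somewhere to land when it does not.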

Implications for the Tech Industry

What’s happening at Microsoft is part of a much larger trend. The demand for generative AI, predictive modeling, and real-time analytics is growing faster than data centers can physically scale.

This has major implications not just for Microsoft but for the entire tech ecosystem. We’re talking about challenges in energy sourcing, thermal management, GPU allocation, and skilled workforce availability. Companies that can solve these issues, whether through hardware innovation, renewable energy integration, or optimized software stacks, will lead the next chapter of the AI boom.

Conclusion

Microsoft’s candid warning about AI capacity constraints serves as a wake-up call: even the biggest cloud providers are scrambling to keep up with the explosive growth in AI.

Yes, Microsoft is investing billions. Yes, it is opening data centers around the world. But that doesn’t mean the road ahead is smooth. For businesses, developers, and stakeholders in the AI space, this is a clear sign to plan ahead, diversify infrastructure strategies, and stay informed.

The AI future is bright—but only if we build fast enough to power it.