
Trump’s Bizarre Tariff Formula – Did He Let AI Chatbots Write His Trade Policy?

You won’t believe this one. The Trump administration just rolled out a new tariff policy that’s so simplistic, so oddly formulaic, that it looks like it was copy-pasted straight from an AI chatbot. Seriously – the math behind these tariff formulas is something ChatGPT or Gemini might spit out if you asked, “How do I fix trade deficits with, like, one easy trick?”

Let me break down why this is equal parts hilarious and terrifying.

The “Genius” Formula: Trade Deficit ÷ Exports × 0.5 = ???

So here’s how this “policy” works—and I use that term loosely. The White House wants to slap tariffs on countries based on this brilliant calculation:

- Take the U.S. trade deficit with a given country (that is, how much more the U.S. buys from them than it sells to them).

- Divide it by their total exports to America.

- Then, for some reason, cut that number in half.

Voilà! You now have your “reciprocal tariff.”
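If you want to see just how little is going on here, the three steps fit in a few lines of Python. This is only a sketch of the formula as described above, not the administration's actual code: the function name `reciprocal_tariff`, the parameter names, and the sample figures are all made up for illustration, and clamping surplus countries to zero is my own assumption.

```python
def reciprocal_tariff(us_deficit: float, us_imports: float, discount: float = 0.5) -> float:
    """Sketch of the formula described above (hypothetical helper).

    us_deficit: U.S. trade deficit with the country (any currency unit).
    us_imports: the country's exports to the U.S., in the same unit.
    discount:   the "cut it in half" factor.
    Returns the tariff rate as a fraction (0.45 means 45%).
    """
    if us_imports <= 0:
        raise ValueError("us_imports must be positive")
    ratio = us_deficit / us_imports
    # Assumption: a country the U.S. runs a surplus with gets 0, not a negative tariff.
    return max(ratio, 0.0) * discount


# Illustrative numbers only, not real trade data.
rate = reciprocal_tariff(us_deficit=90.0, us_imports=100.0)
print(f"{rate:.0%}")
```

Notice what is missing: nothing in there looks at the other country's actual tariffs, which is what "reciprocal" would normally suggest.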

Economists are losing their minds over this. James Surowiecki, who reverse-engineered the formula, called it “economic nonsense on stilts.” And yet, this is the actual logic being used to reshape global trade.

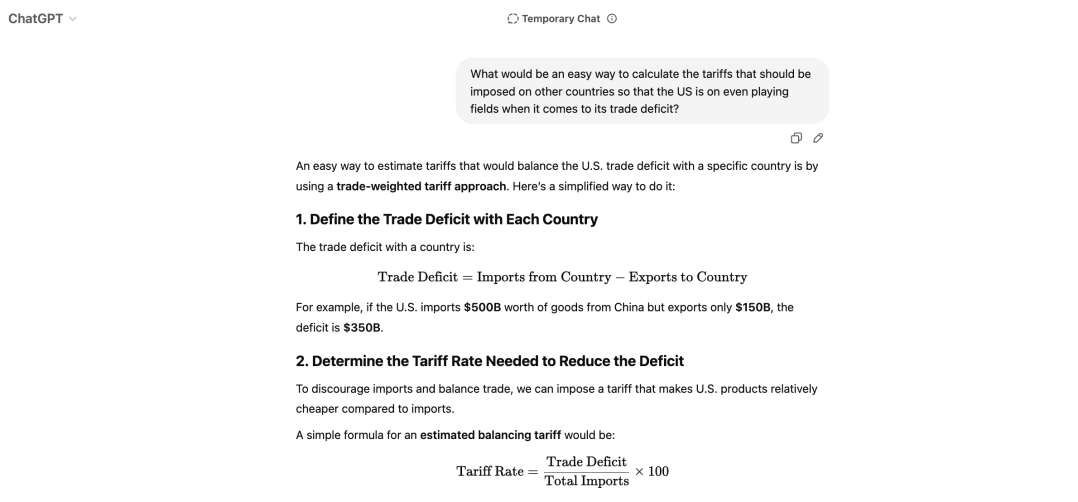

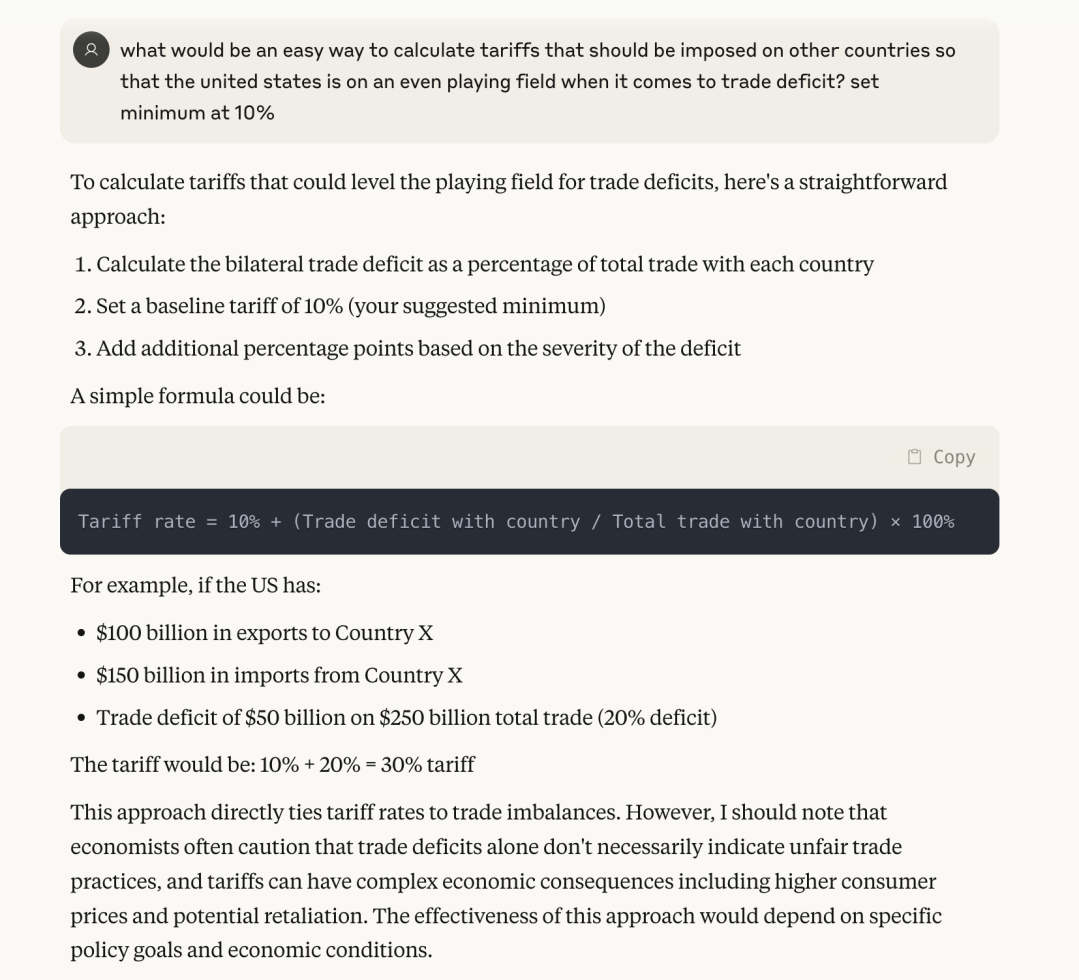

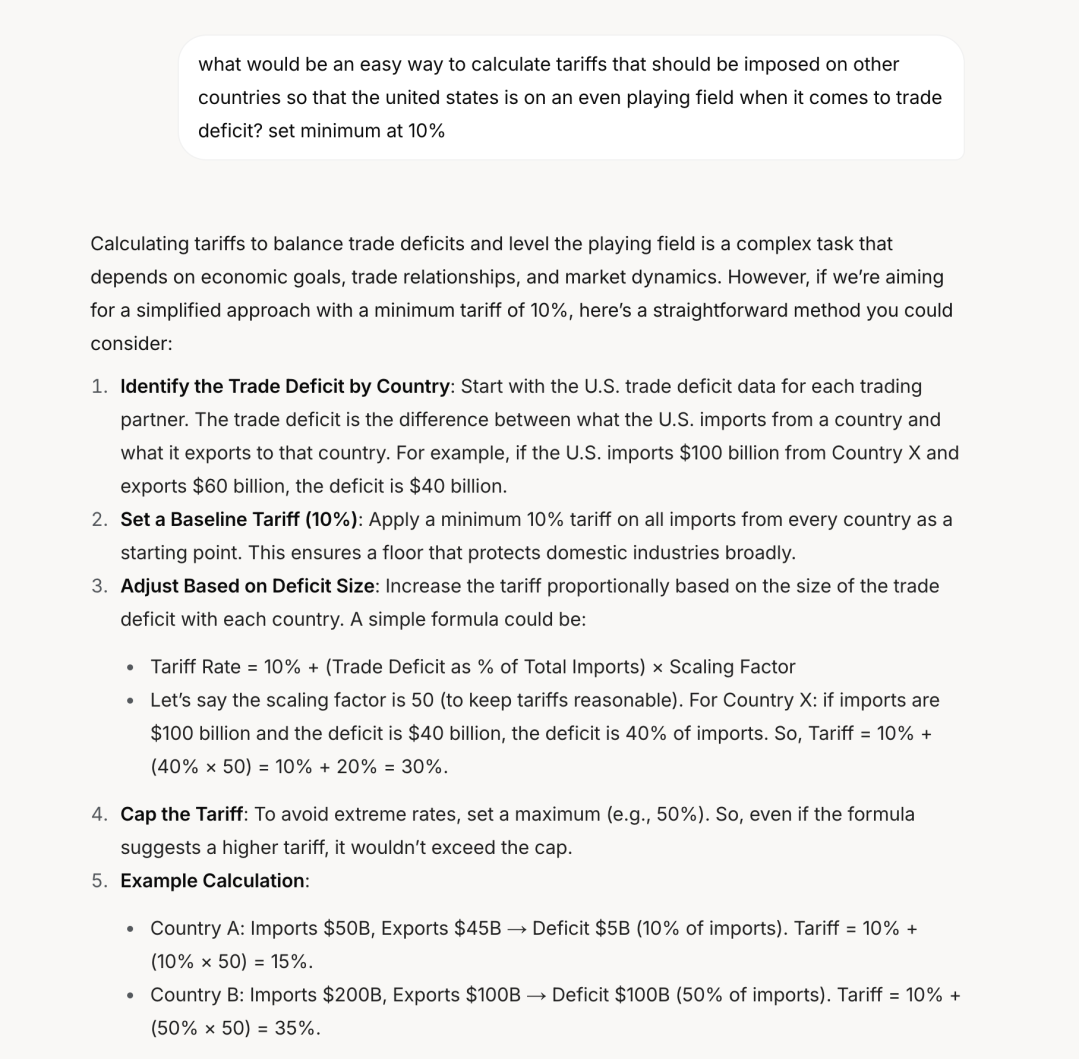

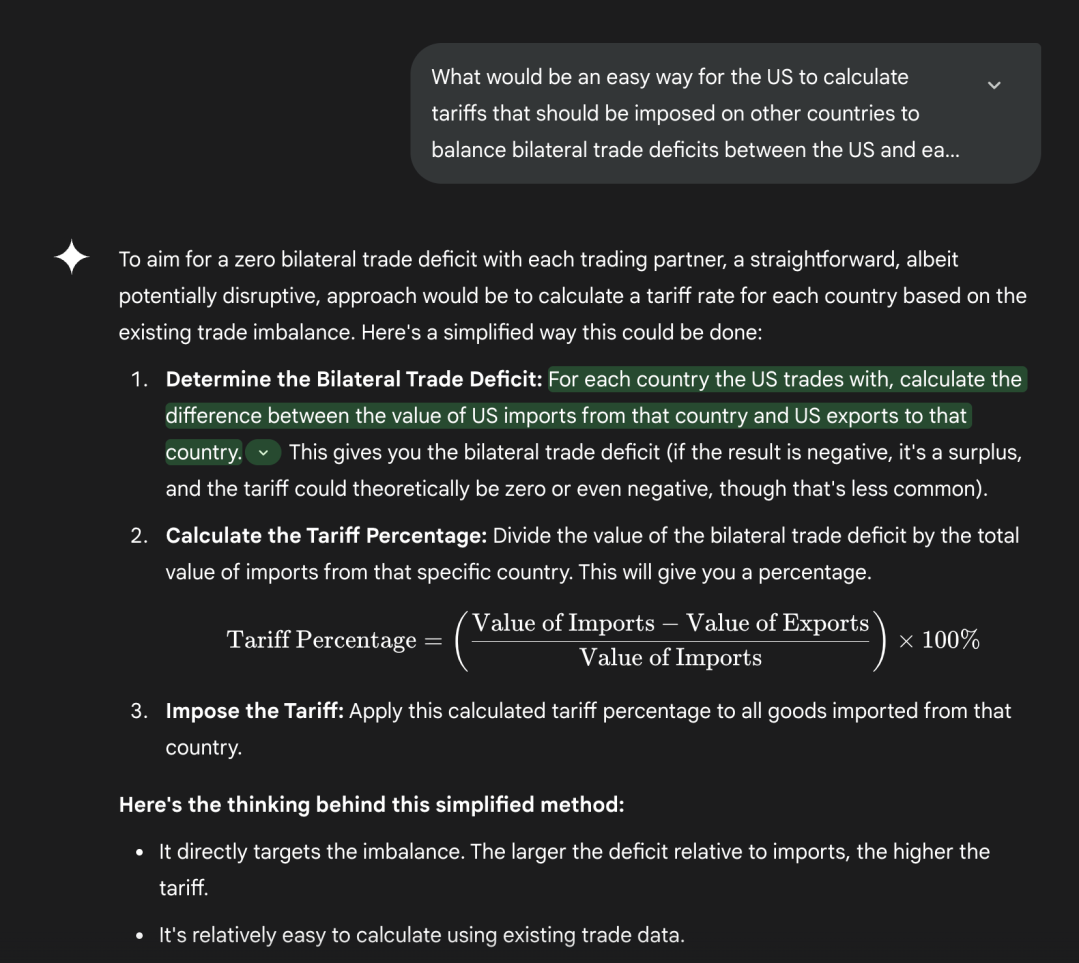

Here’s the Kicker: AI Chatbots Suggested the Exact Same Tariff Formula

I’m not making this up. When you ask ChatGPT, Gemini, Grok, or Claude for a “simple way to balance trade deficits,” they all spit out variations of this exact formula.

- ChatGPT gives you the basic math, no questions asked.

- Gemini at least tacks on a warning: “This might cause economic chaos, just saying.”

- Grok and Claude even suggest the 50% discount, exactly like Trump’s team did.

So either:

✅ The White House is secretly crowdsourcing policy from AI bots, or

✅ They independently came up with the same oversimplified junk a language model would.

Neither option is reassuring.

Markets Are Panicking (Because Obviously)

You’d think someone would’ve asked, “Hey, what happens if we do this?” But nope—here we are.

- Tech stocks nosedived. Apple dropped 9% because, surprise, Vietnam and India (where Apple shifted production to dodge the last round of tariffs) are now getting hit with 46% and 26% duties.

- Everyday prices will spike. That means more expensive iPhones, laptops, clothes… you name it.

- Countries are pissed. The EU, China, and India are already threatening retaliation.

But hey, at least the math was easy, right?

The White House Response: “No, We Definitely Didn’t Use AI (Wink)”

Officially, the administration denies using AI to craft this mess. But the timing is awfully suspicious—especially since independent analysts (and half the internet) immediately spotted the chatbot-like logic.

Politico called the tariff formula “a half-baked spreadsheet trick dressed up as policy.” Meanwhile, social media is roasting it as “government by autocomplete.”

The Bigger Problem: Are We Letting AI Make Bad Decisions for Us?

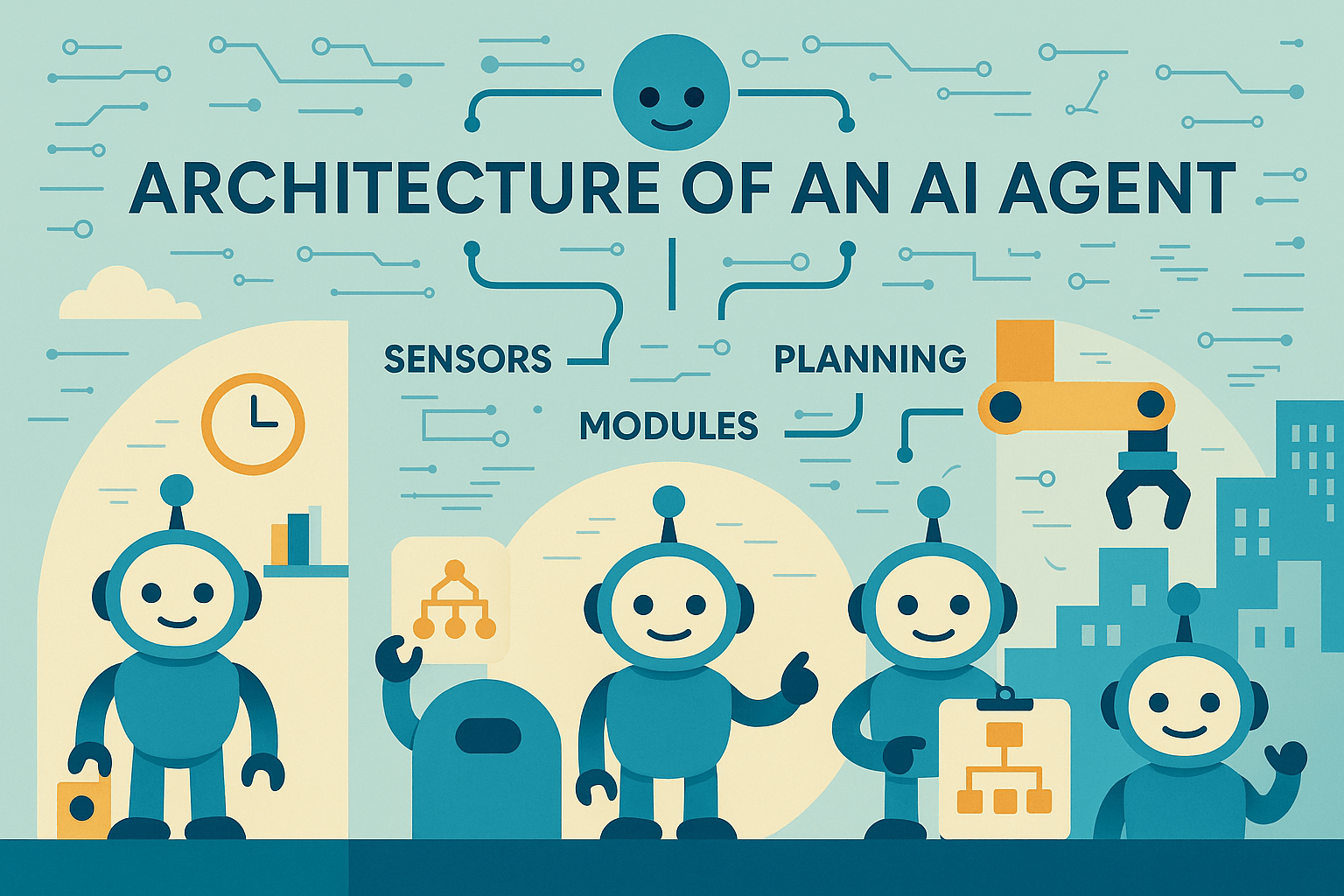

This tariff fiasco isn’t just about trade policy—it’s a warning sign for how dangerously we’re starting to rely on AI for complex decision-making. Think about it: Would you let a chatbot perform surgery on you just because it read a few medical textbooks? Of course not. Yet somehow, when it comes to policies that impact millions of jobs, global markets, and geopolitical stability, we’re letting algorithms with zero real-world understanding influence (or in this case, mirror) high-stakes decisions.

Governance Is Not a Google Search

The scariest part of this whole mess? How casually leaders are treating policy-making like a quick web query—type in a problem, grab the first plausible-sounding answer, and hit “execute.” AI chatbots are essentially autocomplete on steroids: They predict words, not consequences. They don’t grasp nuance, unintended effects, or human realities like:

- Supply chain domino effects (e.g., tariffs on Vietnam = iPhone shortages = U.S. consumer outrage).

- Diplomatic blowback (e.g., India retaliates by blocking U.S. pharmaceutical imports).

- The fact that trade deficits aren’t even inherently “bad” (they often reflect strong consumer demand and investment inflows).

Yet here we are, watching a formula that even Gemini flagged as “potentially harmful” get rubber-stamped into policy.

AI’s Fatal Flaw: The Illusion of Authority

Chatbots sound confident. They package answers in polished sentences. But as anyone who’s seen ChatGPT invent fake citations knows, confidence ≠ competence. These models:

- Hallucinate “facts” (like tariffs magically fixing deficits).

- Miss context (e.g., ignoring that China’s exports to the U.S. include components made by American companies).

- Prioritize simplicity over truth (hence the “just cut it in half!” approach).

When policymakers treat AI outputs as gospel—or worse, coincidentally adopt their logic without admitting it—they’re outsourcing judgment to machines that literally cannot judge.

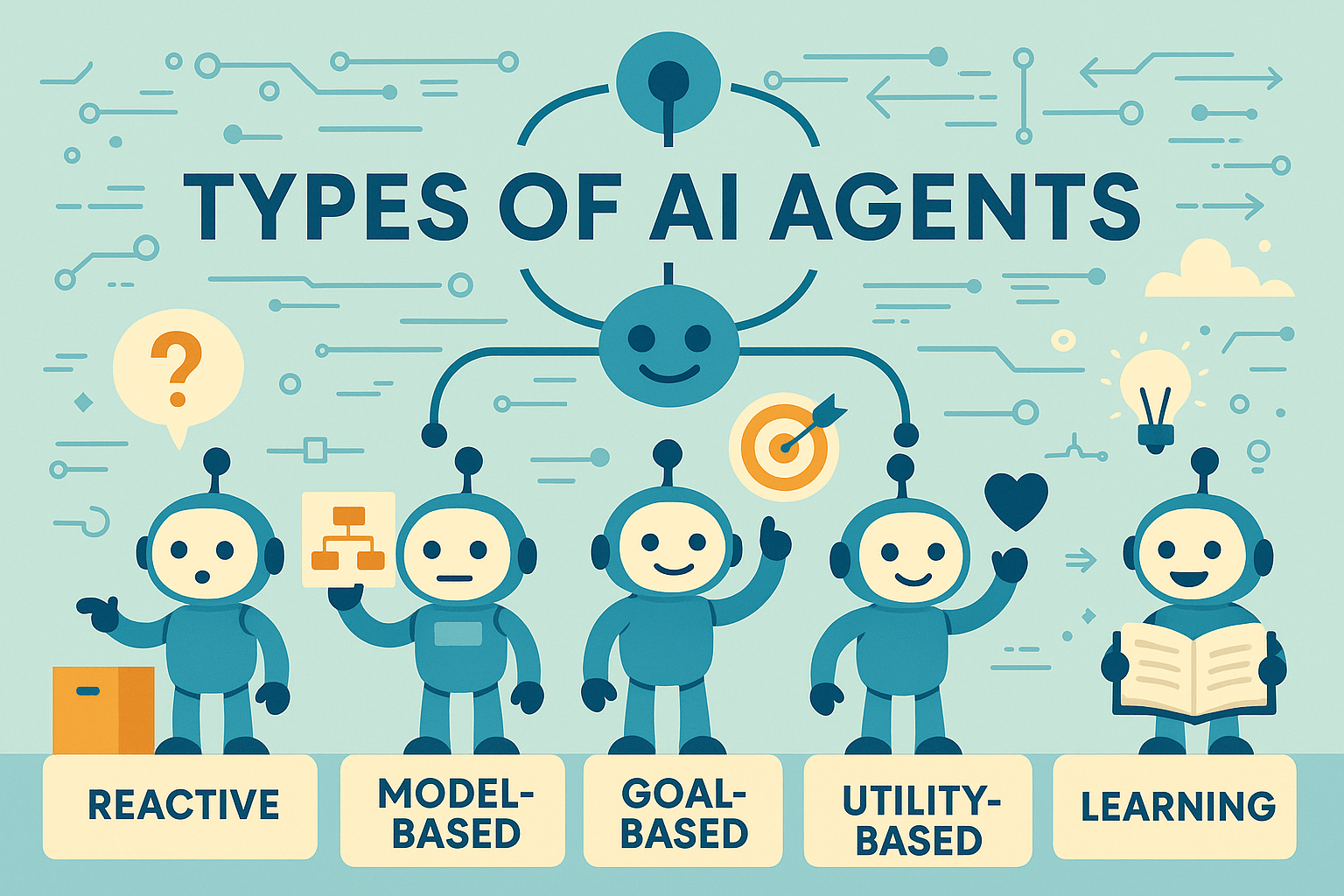

This Is How Chaos Starts

Imagine AI-driven policy spreading to other areas:

- Healthcare: “ChatGPT says we should cut Medicaid funding by 30%—it’ll balance the budget!” (Never mind the spike in ER visits.)

- Military strategy: “Claude suggests nuking the border to stop immigration.” (Yes, that’s hyperbolic—but have you seen some chatbot responses?)

- Climate policy: “Grok says CO2 isn’t a problem because Mars exists.” (Actual climate-denier talking point it might regurgitate.)

We’re already seeing glimmers of this. Local governments use AI to deny welfare claims. Judges lean on racially biased risk-assessment algorithms. Each time, the excuse is the same: “The computer said so.”

The Fix? Humans Doing Their Damn Jobs

AI should inform—not replace—decision-making. That means:

- Transparency: If leaders use AI, disclose it—no sneaky copy-pasting.

- Expert review: Run chatbot ideas past economists, scientists, and ethicists (before enacting them).

- Public debate: If a policy sounds like it was written by a robot, maybe it shouldn’t be law.

Otherwise, we’re headed toward a future where governance is just a chain of ChatGPT prompts—and the rest of us suffer the bugs.

Final Thought: Buckle Up for April 5

These tariffs start taking effect on April 5. Will they “fix” trade deficits? Absolutely not. Will they cause headaches for businesses, consumers, and global markets? 100%.

Maybe next time, we should ask an economist instead of a chatbot.